Conduktor Trust

Democratising data quality

Data quality management needed deep technical expertise just to set up basic quality checks, and those checks lived in isolation where nobody could discover or reuse them. This technical barrier was preventing data quality from becoming a shared organisational practice. We designed a solution that democratises the process by opening it up to non-technical users, promoting collaboration and reuse.

Our previous approach to managing data quality created barriers that prevented teams from effectively ensuring reliable data. Setting up data quality checks required deep technical knowledge and complex configuration files, meaning only technical experts could create or modify these checks.

The bigger issue was that these quality checks existed in isolation: teams couldn't easily see which checks already existed, share successful approaches, or understand how quality requirements connected to business needs.

We wanted to tackle this challenge, turning data quality from an afterthought into a core consideration when building data products.

Research: Exploring different product structures

As with all good projects, we started on a whiteboard, keeping things as open and rough as possible while we were still brainstorming approaches.

Our whiteboard for exploring initial ideas.

We made sure to involve the engineers at this stage to understand whether there would be any technical limitations on the frontend or backend before we started.

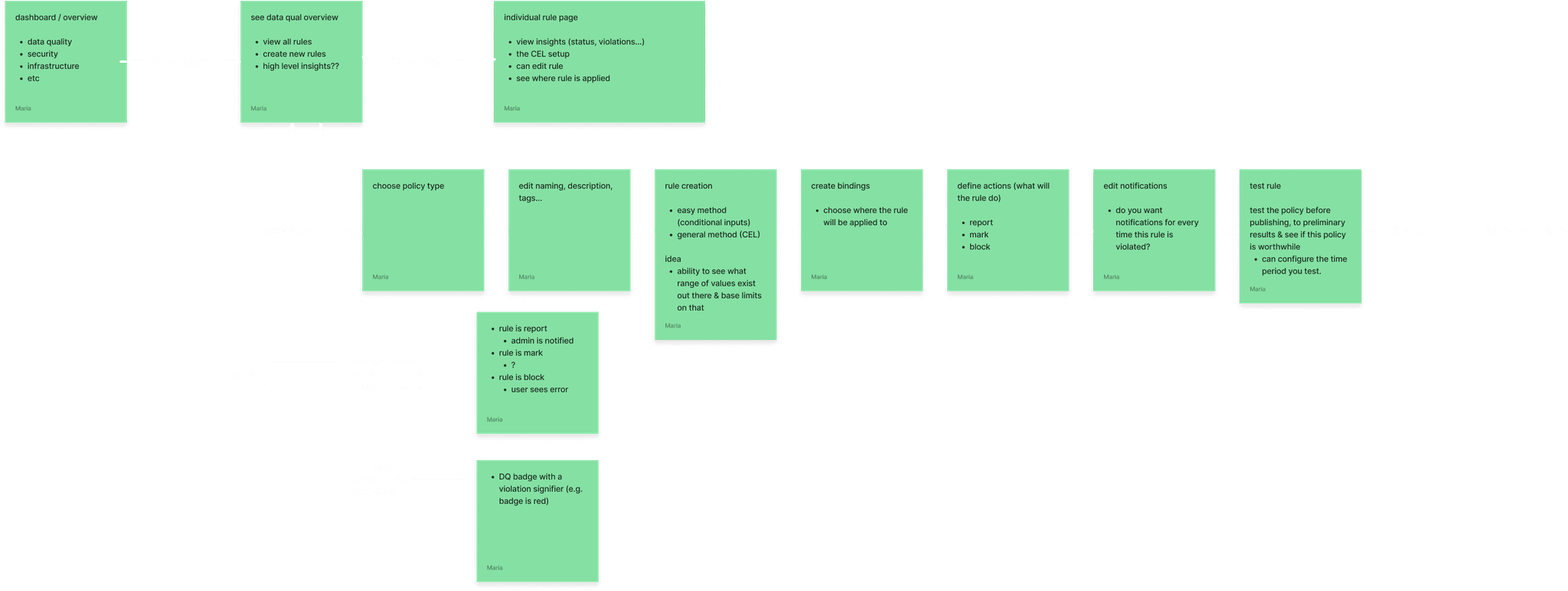

A refinement of our initial whiteboard sketches in FigJam.

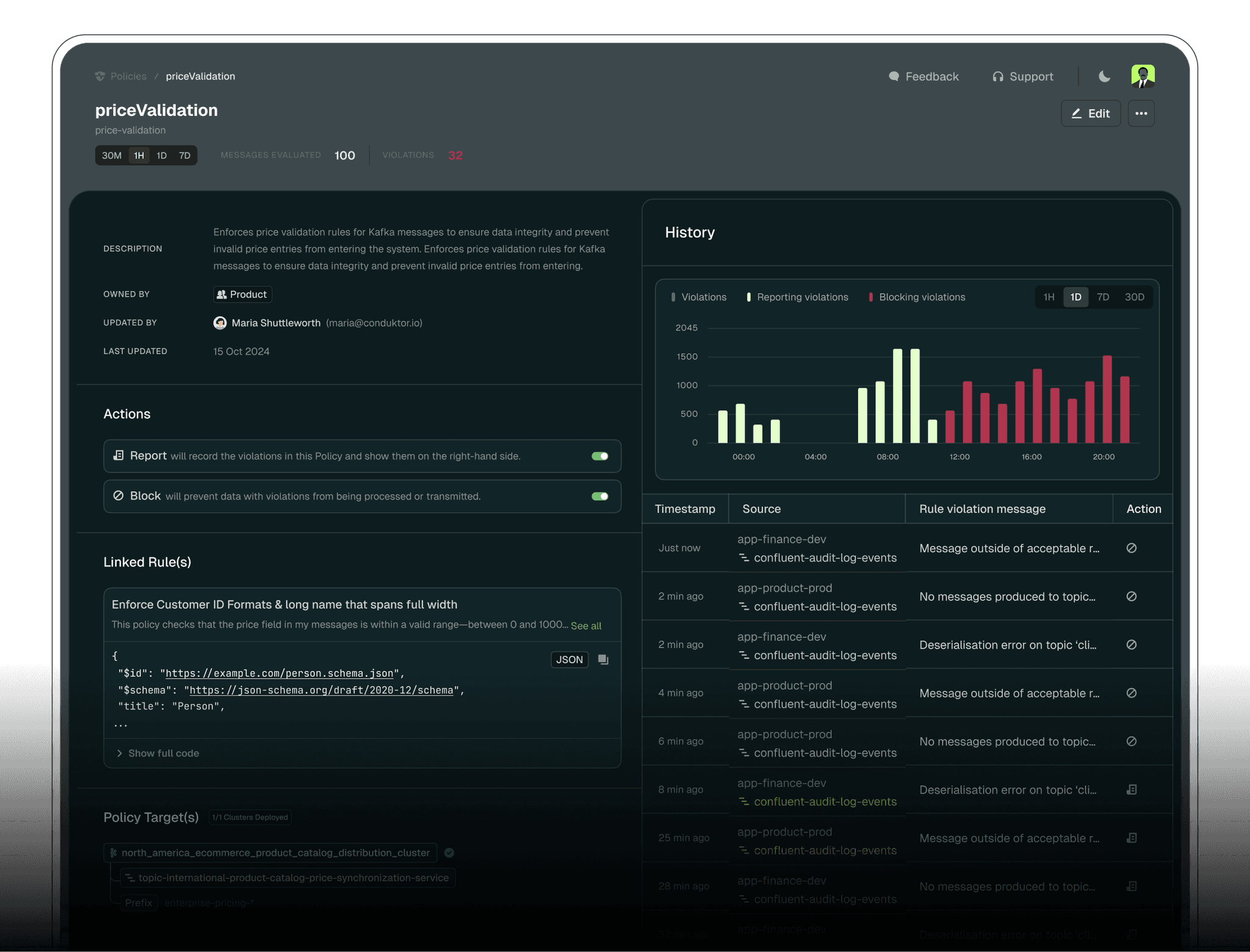

Based on this exploration, we were sure of a couple of things. The first was that it made sense to split the workflow into two steps: Rules and Policies.

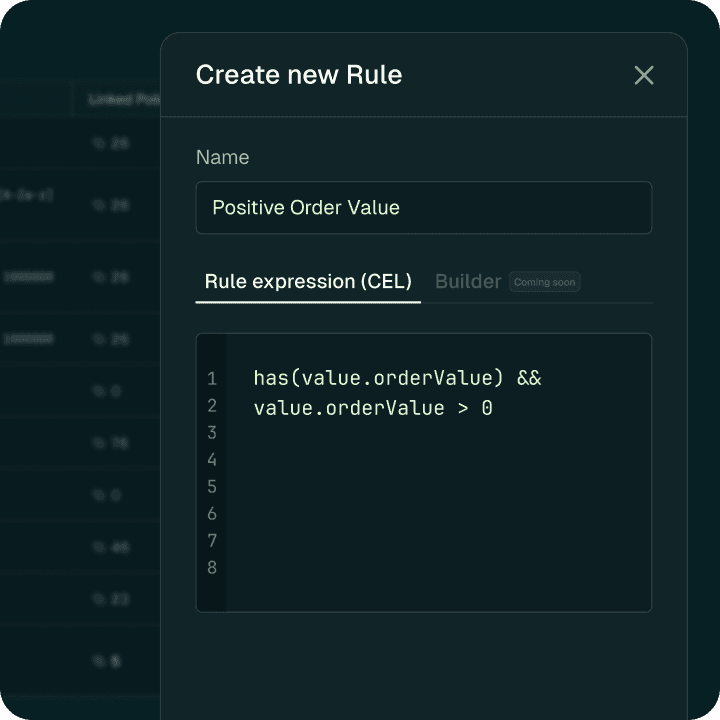

Step 1

Define a Rule. Use CEL (Common Expression Language) to write clear, precise rules that enforce structure, completeness, and conformance. Rules are the backbone of a Policy, essentially telling it what to do and when.
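To make the idea of a Rule more concrete, here is a minimal Python sketch. It is not Conduktor's implementation: the CEL expressions, the `value.` field prefix, and the field names (`customerId`, `amount`, `currency`) are hypothetical, and each Rule's `check` is a plain Python predicate standing in for a compiled CEL expression. It shows one rule each for structure, completeness, and conformance.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]


@dataclass(frozen=True)
class Rule:
    """A named data quality check (hypothetical model, not the product's API)."""
    name: str
    cel: str  # hypothetical CEL text, kept for reference only and never evaluated here
    check: Callable[[Record], bool]  # Python stand-in for the compiled CEL expression


RULES = [
    # Structure: the amount field must be a floating-point number (CEL "double").
    Rule("amount-is-double", "type(value.amount) == double",
         lambda r: isinstance(r.get("amount"), float)),
    # Completeness: customerId must be present and non-empty.
    Rule("has-customer-id", "has(value.customerId) && size(value.customerId) > 0",
         lambda r: bool(r.get("customerId"))),
    # Conformance: currency must be one of an allowed set of codes.
    Rule("currency-allowed", "value.currency in ['EUR', 'GBP', 'USD']",
         lambda r: r.get("currency") in {"EUR", "GBP", "USD"}),
]


def failed_rules(record: Record) -> list[str]:
    """Return the names of the rules this record violates, in rule order."""
    return [rule.name for rule in RULES if not rule.check(record)]
```

For example, `failed_rules({"customerId": "c-1", "amount": 12.5, "currency": "EUR"})` returns an empty list, while a record with an empty `customerId` would be flagged by `has-customer-id`.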

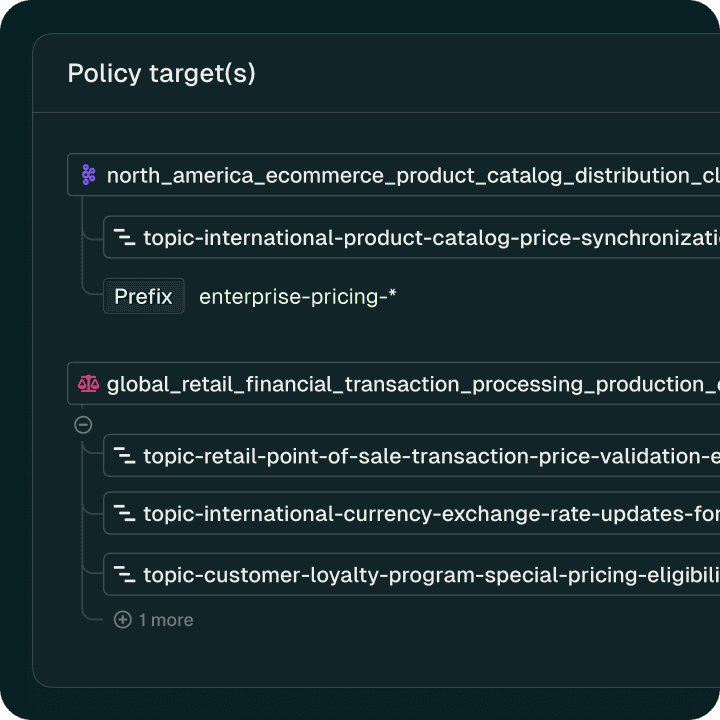

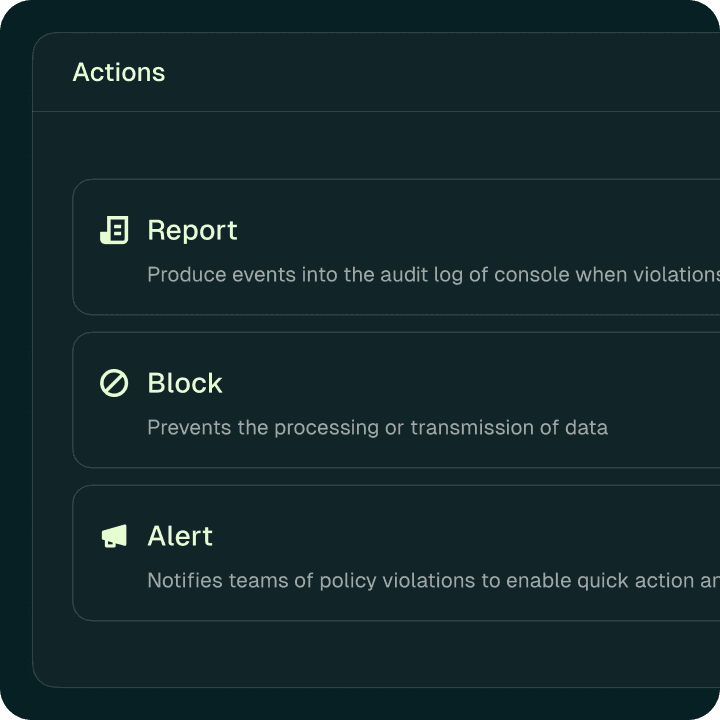

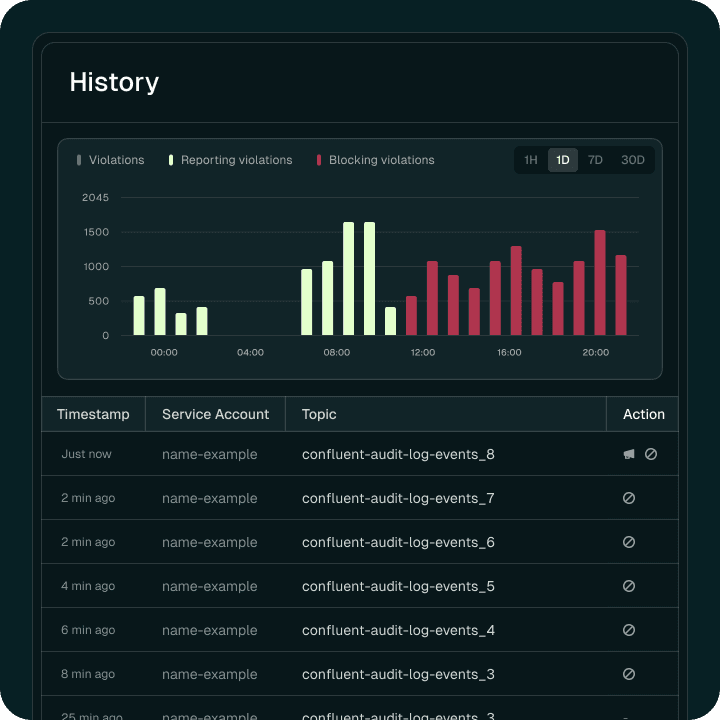

Step 2

Create a Policy. A Policy consists of attached Rules, a Target, and Actions. Together, these let you administer rules across your entire Kafka environment rather than devolving responsibility to individual teams or people. This encourages best practices around visibility and data quality while reducing risk and improving compliance.
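The Rule, Target, and Action composition can be sketched in Python as a simple data model. This is a hypothetical illustration, not the product's API: the `Policy` class, the topic-prefix Target, the `Action.REPORT` and `Action.BLOCK` values, and the example rules are all assumptions, and each rule's `check` is a Python predicate standing in for a CEL expression.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Callable

Record = dict[str, Any]


class Action(Enum):
    # Hypothetical action types; the real product's actions may differ.
    REPORT = "report"
    BLOCK = "block"


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[Record], bool]  # stand-in for a compiled CEL expression


@dataclass
class Policy:
    name: str
    rules: list[Rule]            # attached Rules
    target_prefixes: list[str]   # Target: topics whose names start with these prefixes
    actions: list[Action]        # Actions to take when any rule fails

    def applies_to(self, topic: str) -> bool:
        return any(topic.startswith(prefix) for prefix in self.target_prefixes)

    def evaluate(self, topic: str, record: Record) -> tuple[list[str], list[Action]]:
        """Return (failed rule names, actions to take) for one record on one topic."""
        if not self.applies_to(topic):
            return [], []
        failed = [rule.name for rule in self.rules if not rule.check(record)]
        return failed, (list(self.actions) if failed else [])


# Hypothetical rules, defined once and attachable to any number of policies.
has_customer = Rule("has-customer-id", lambda r: bool(r.get("customerId")))
non_negative = Rule(
    "amount-non-negative",
    lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
)

orders_policy = Policy(
    name="orders-quality",
    rules=[has_customer, non_negative],
    target_prefixes=["orders."],
    actions=[Action.REPORT, Action.BLOCK],
)
```

Keeping Rules as standalone objects that Policies merely reference mirrors the two-step split: the same rule can be reused across many policies, while each policy decides where it applies and what happens when a check fails.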

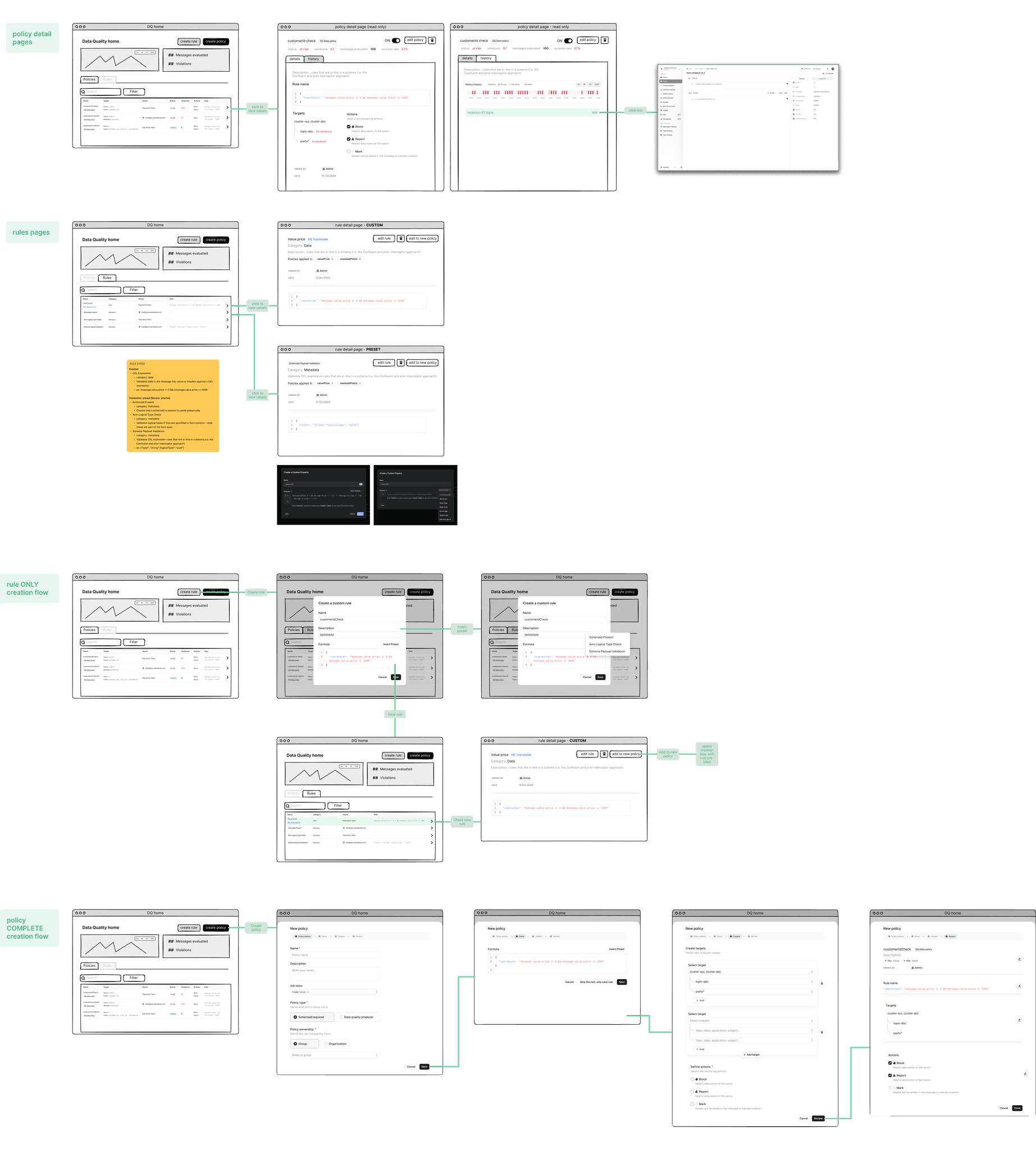

Designing: Keeping it low-fi

This was a fairly tricky design with a lot of back-and-forth, especially around how to display Rules and Policies so that they felt distinct yet related.

There was also a lot of ambiguity surrounding the project because the PM was on annual leave, so I worked directly with the CTO and Head of Design. I combatted the ambiguity in requirements and PRDs by keeping the project as low-fidelity as possible for as long as possible, until I was sure that everyone was happy.

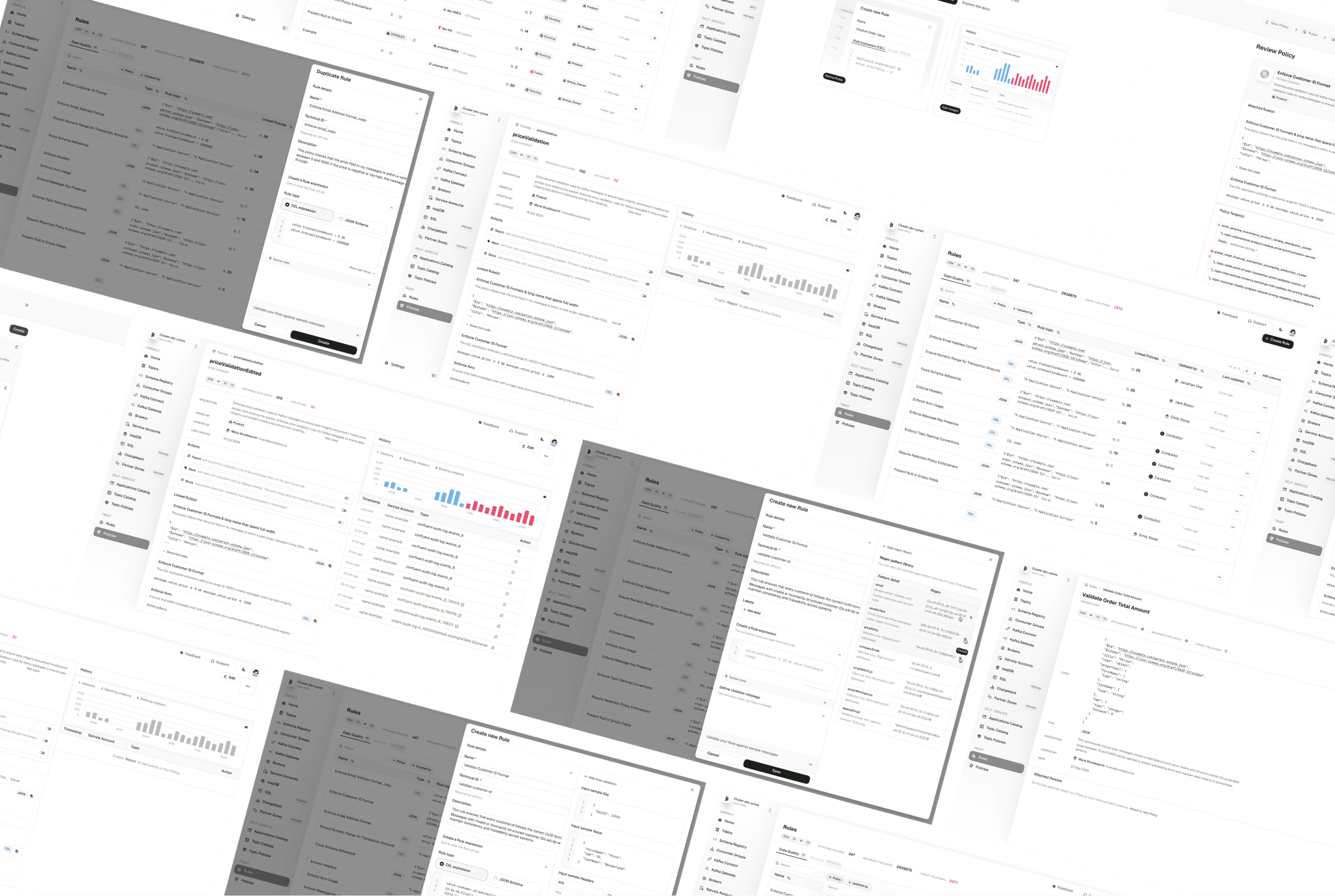

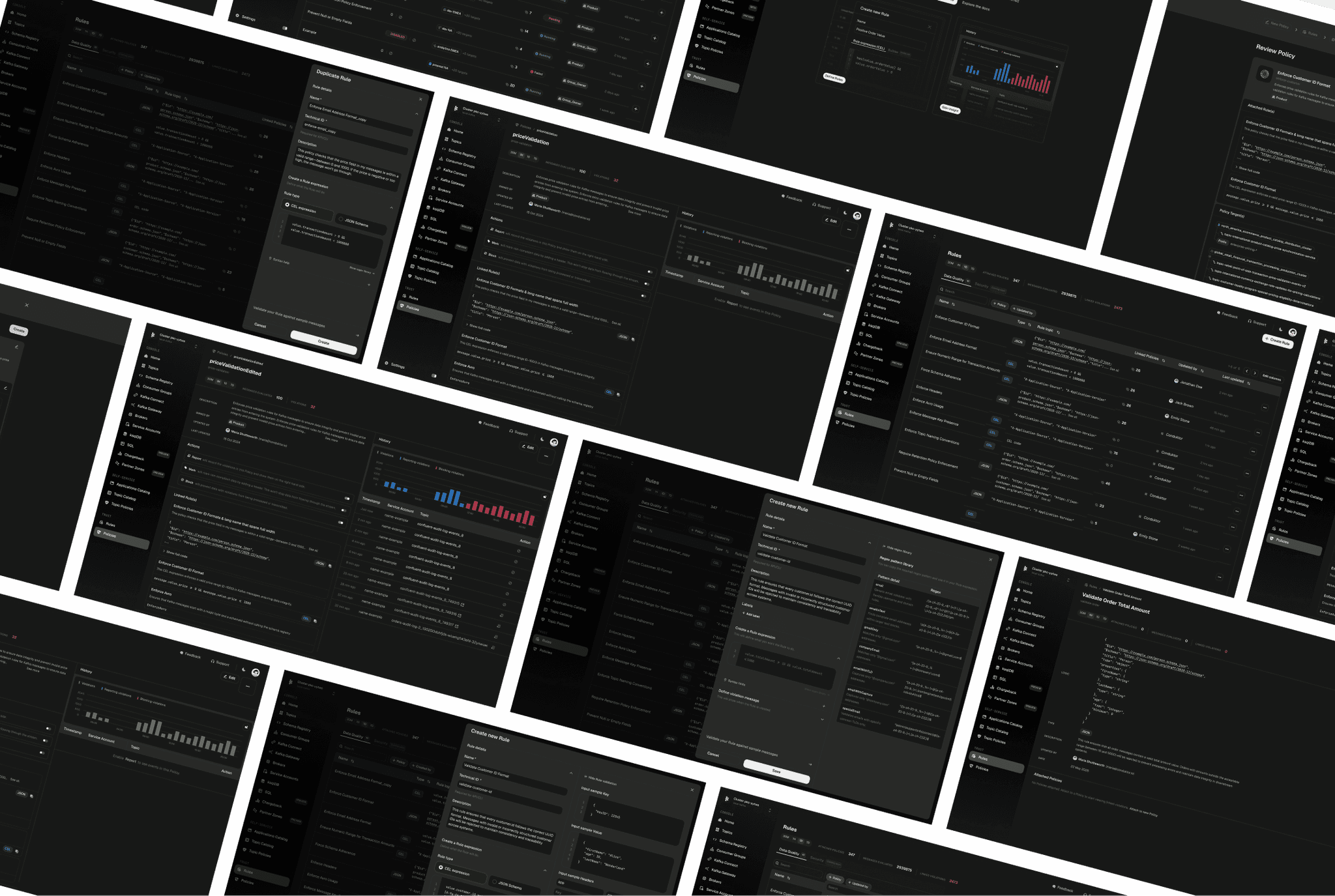

My work in Figma, slowly figuring out the UI with basic building blocks and keeping it low-fi while still in the exploration phase.